0. Tunes Beat Dementia

Reflections

Aleks Reflection

Week 1 and 2:

It was interesting, because normally in Computer Science we had a problem that was given to us, and we had to solve it. In this course we had to pick the problem that we wanted to solve. Now one of the challenges was constantly considering "is this actually needed" / "will this help dementia patients". Thoughts such as "to what extent can we help" would also appear in my mind. Or we had ideas about creating some sort of technology, such as a pill dispenser, and we would come to the realization that this is not so meaningful after all. In the context of dementia patients, if they are at the stage of heavily forgetting to take the pills, they need a caregiver either way. And pill dispensing might be complicated for both the patient and the caregiver, and it doesn't bring that much positive, meaningful value. So in the first weeks, we really had to think about what matters to such patients and caregivers. It was very nice to follow the x-wiki format, as it forced us to first consider stakeholder values. We came up at first with a few bad ideas, but then eventually in week 2 our dancing robot idea got finalized.

Week 3:

Week 3 in terms of content was interesting, because it made me reflect on my own memory. We discussed different memory impairments, and I could see how I am already affected by some of them to some extent. It is because our memory can't be perfect. I also reflected on my own dimensions of recollective experience. In the context of studying and computer science, the topics that stuck with me the most were the ones that I identified myself with to some extent. Most often I also put some emotional value towards events or people that surrounded me while learning some topics. Some topics, like AI, I found from the start slightly disturbing and since I put negative connotations to those I now don't remember a lot from those topics.

Discussing Memory Support Technology also stuck with me, because it made me realize that we can't have technology for everything. For example, recording all our interactions and even extracting some information from those could be overwhelming. I also realized that some of those technologies are super complex, and memory is actually such a complex process. I felt grateful that my brain can do all those processes subconsciously.

Project wise, I feel like we got slightly confused with what we wanted to evaluate. So we were also reiterating on that this week.

Week 4:

Content wise I haven't learned much this week, because all of it was revision of the stuff I learned during other courses already. I had a course of Human Computer Interaction, and for that one we spent a few weeks on learning about different evaluation methods. For that course we also had to do an HREC evaluation.

But the lectures really made us think in terms of our own project. That was the week when we had to formulate our claims into something that was testable. So in that week we created the first draft for that. Besides that, one of my big tasks that week was preparing the presentation slides for the midterm. I worked on the foundation, but that forced us to reiterate again and put everything together.

Week 5

Time flies, presentation time. I was proud of our slides and we ended up having a clear storyline. It was also nice to put everything together to see what we don't have and what we have. After this week, we were 90 percent done with foundations and specifications. Only in the last weeks, we had to improve those sections based on feedback. But we were fully ready to start evaluating our prototype now.

Week 6

I had never heard of the term ontology until then. Or maybe I had, but I hadn't used it in the context of computer science. Having an ontology as a visual creates a nice and simple overview of the entire system. Besides that we covered topics of inclusivity again. It was nice because it made us think once again about our system design. However, at that stage we had already considered so many specifications and design patterns that we believed our system was as inclusive as possible.

We decided to use the Miro robot for our prototype. We scheduled a meeting to get instructions regarding its use that week.

Week 7

This week we covered even more theory, and a lot of it related to my bachelor thesis! My thesis was on the topic of theory of mind. That week we further covered how the robot interacts with the environment, so we could go back and reflect on it and see what other design patterns we could implement for our robot. I think around that time we looked more into literature because we were really thinking about how our robot would interact with the outside world.

That week I wrote 2 reflections:

Today we worked with Miro for the first time. We managed to successfully connect to Miro, but controlling the sensors is challenging. Further, to enable a connection between the AI and Miro, Python version 3.8 is required, but Miro only operates with version 3.5, and we cannot update it because this would cause conflicts with its sensors.

Week 7 Final touches:

We created the prototype, which is actually really cool. Everyone can run it from a computer and it actually talks and interacts with you. As a non-AI student I've never done that, so it was shocking to me that we (mainly Deniz) managed to create one Python file that would lead to my computer playing music off my phone and talking to me.

We also conducted the evaluation. Each team member did it individually. The participants were shy, but as long as their favourite song was played they would dance a bit. No one would actually stand up to dance, but they moved their body a bit while sitting down.

Overall reflection:

I was very happy with our process because it was very iterative. It was quite unfortunate that we couldn't get Miro to work, especially since when thinking about the robot we saw the value of it being a physical object that interacts with someone. Now, in our evaluation, we could have made it better by showing recordings of a moving Miro, but that still requires the user to use imagination, which is not ideal. But the nice thing about this project was that we focused on this one isolated claim, and the functionality was very simple. And it still made me realise how much we had to think about for such a simple design. I think in general, I now have a better way of approaching such projects. I know from the start that I have to really consider personal values (as before I would kind of skip this step). And I also have those nice frameworks for writing our claims in terms of causes and effects. For this course we were kind of blindly following the framework, but now I've built an intuition for it and I can see that this approach works!

Alex's Reflection

Week 1:

I learned that cognition is always situated, and technology should fit naturally into human contexts. Our upcoming project seems very interesting, not just as a technical task, but as a way to design meaningful human-robot interactions.

Week 2:

We chose our prototype as a robot to help with dispensing pills. The lecture on value-sensitive design reminded me that good design balances functionality with human values and motivation. It encouraged me to think about how our system could support user autonomy and engagement.

Week 3:

Learning about human memory helped me see how technology can extend cognitive abilities. As a group, we decided to scrap the first idea and continue with a dancing robot. A companion and staying active made more sense for our use case and are also probably more relevant for the user.

Week 4:

We worked on our wiki. I realized that clear documentation and evidence-based reasoning are key to improving both the design and its credibility. Making the slides also helped me see what we decided on so far and what we are missing.

Week 5:

Presentation (I didn't present because I made slides, so it was easy). Other presentations made me think about points that we mention but didn't think deeply about yet. Also made what we are missing a bit more clear.

Week 6:

Make preparations to work with the robot and divide tasks, not much more new this week.

Week 7:

Work with the robot (Miro) and design the dance partner. Working with the actual robot was very tough. There is a lot you need to be familiar with to actually make the robot do what you want. We think that the robot is mostly for visual purposes and that the voice interaction and music is the backbone of the prototype. Deniz coded the dancing session interaction. In hindsight, I'm not sure why we thought coding Miro is going to be easy. It would make sense that coding and interacting with a robot is hard, otherwise there would have been a lot more of them...

Contributions (Excluding group work):

Initial version of section 1.b.2.

Initial version of the TDP (diagram)

Slides for Specifications (of the first presentation)

Initial results and conclusion

Updated (final) modifications to Design scenario: 2.a1 (final paragraph showing a normal use-case loop). Additions and formatting to 2.b, d.

Overall:

- It made me realize how important it is to first think about why we do something. I knew from other courses where we created human-computer interactions (and from creativity courses) about the importance, but coming up with a new idea on our own (that we all agree with) was way tougher than I expected before this course, probably because I did the why and how separately so far. There is so much to consider when creating an idea, not just the product.

- Robots seem very easy but are way harder than I thought. Making multiple systems work in unison and coordinate was too hard. I was too much of an idealist and didn't really think this would be a problem until we hit it. Sticking to just the music and selecting voice as a medium for the interactions is (to me at least) the most rational way of testing whether the idea has any chance of being practical.

- Breaking apart problems into smaller problems (be it for design ideas, implementation or testing) is so much more important when tasks are seemingly abstract. A very big part of our work felt like understanding what to achieve and then how to achieve it. What to achieve was easier to understand, as the lectures and labs helped a lot with that. But how to achieve it is still hard. Continuous work is probably the only feasible way, as there will always be new problems we didn't think about, but it makes getting started way harder than it is to just keep going once you start. It is also very clear why there is so much work done on the process of creating products, as doing just what feels intuitive or right is never going to be enough.

Also, looking back at it all, we mostly did a custom version of a home assistant. The main differences are that we propose to use a robot rather than a speaker, because it helps maintain human values, and that we consider LLMs as the companion instead of a scripted ML algorithm. Maybe they would sell a lot better if they could make a cheap standing robot that acts like a voice assistant but moves (if you want it to). It would be a lot more engaging, and using local LLMs can make it personalized to your preference.

Deniz's Reflection

Week 1:

I'm excited about the course! I'm quite interested in psychology, and I heard one of the instructors majored in Cognitive Psychology at Leiden University, that's so cool! It was also interesting to discuss how robots should complement rather than replace humans.

Week 2:

We started shaping our idea. At first, we thought of building a robot to help with pill dispensing, but value-sensitive design made us think about what people actually need. We realized that companionship and emotional support might matter more than functionality in this context. That shift made us rethink the project’s purpose, which I think was an important turning point.

Week 3:

We are pivoting towards a dancing companion robot for dementia patients. I find it a great idea to help get the PwDs some physical activity via dancing! We probably won't get to test this at a large scale with actual dementia patients (I'm assuming that's outside of this course's scope), but I'd really like to observe the effects of such a system applied in a real scenario.

Week 4:

We mainly worked on xwiki this week. I find the platform quite clunky; but it is a great system to present a report.

Week 5:

We had a presentation this week. Alex and Aleks did the slides, while Vladimir and I presented. It was nice to present, as I got to familiarize myself with all parts of our project, not just the ones that I worked on.

Week 6:

We decided on our prototype. We will be working with the Miro-E robot dog! This will be new for me. I looked up how to connect to and work on actual robots.

Week 7:

We met up with the team to try to work on Miro. It was a thrilling challenge and quite an experience, although we had mixed results. While we were able to connect to it, make it move, and create sounds (only sine waves at selected pitches though), we quickly found that 1) it was really clunky to work with, and 2) the technology is quite outdated, so outdated that we couldn't run LLMs or implement TTS on Miro (Miro has Python 3.5, and OpenAI's API only supports Python 3.8 or higher). We instead resorted to implementing a conversational agent that has a listening module, a speech module, and uses the Spotify API to play music. I was so glad that I took the Conversational Agents course last year, as I applied the knowledge from that course (and reused some code from it) to implement this agent, which we used to conduct the experiments with the participants.

Overall, I really would have liked to actually get Miro working. I feel a bit disappointed that we weren't able to, but it was also really valuable to gain experience in constructing a digital agent in place of Miro. It was still fun to interact with the agent, and I did feel as if it would work as intended in a real-life scenario as well, but I would have liked to have tested it with a moving and dancing Miro, since that was the original idea. Perhaps if I were to do this course all over again, I'd research how Miro works beforehand and plan accordingly. We were kind of confused about the direction we wanted to take as a team, and we sort of figured it out as we went; we did not know that Miro was not capable of what we wanted to do with our project. I don't think we can say much from the results. I really would have liked to have a larger-scope course (maybe a 2-part course over 2 quarters or something) or a proper research project that would let me observe the effects of our agent on a larger, more statistically significant population. I also really enjoyed working with my team. I feel like we got along very well, we very often seemed to be on the same page, and we worked really efficiently. It made the whole course way more enjoyable.

Vladimir's Reflection

Course content:

Week 1: It was quite interesting to hear the emphasis on complementing workers over replacing them, but it is quite tough to consider. A lot of ideas that we tend to have for robots working alongside humans can easily replace workers without that being the goal. Even just something as simple as an automatic "pill dispenser" for people with dementia may already be on the path to replace caretakers if enough of these kinds of ideas are incorporated. In order to make something that truly "complements" caretakers, it needs to add a new kind of activity that is helpful to the people with dementia, perhaps improves their mood, but does not actually disrupt the caretaker's position. But this kind of restriction is still hard to keep in mind. We want the best for both workers and their target audience at the same time, but some ideas, such as a "self-scanner", can be nice for people who are more introverted. But ideas like this are clearly making it so fewer employees are needed at stores (though I have noticed how there are stores out there that actively choose to NOT have a self-scanner). At the end, it's a battle of ethics.

Week 2 (hindsight focused): This was the week in which the main claim structure was shown, utilizing Use Cases, Functions, and Effects. Overall, I found that their incorporation in the use case structure made it very clear in a small overview what is needed to implement a specific feature. In the meantime, the claims that work alongside it make it very clear what makes your product worthwhile, assuming your tests end up agreeing with the descriptions of those claims. The IDP and TDP were quite confusing due to their very similar names yet very distinct goals and would definitely be something to look at again in another project.

Week 3: This topic was quite interesting, though I had never thought about just how many different kinds of memory impairments there could be. Up until now I primarily knew of e.g. dementia, memory loss, and blocking of memories through PTSD, but the existence of distortion-type impairments and even the inability to form either short-term or long-term memories separately is definitely new to me. There is so much that can go wrong in the brain, it's honestly quite a miracle that it all works so well most of the time. Unfortunately, I think everyone has experienced how memories do tend to fade over time. Perhaps the way all information is ordered could explain this. If everything is stored in some form of "graph", then certain links do need to go, as the graph would otherwise be way too complex, which is unfortunate as I thought the brain should have enough capacity for it. Perhaps there is a way to train yourself to manipulate the way this graph is formed, so you can better organize your memories? Unfortunately, it seems that having other devices do this work for you is a lot more dystopian, though at the same time, I am not sure if I would be interested in such a device in the first place, since I do not even bother to take pictures of my activities.

Week 4: This ended up being quite a calm week, mainly because a lot of the material is stuff I had already seen in HCI. It seems there is quite a lot of overlap! It is quite nice to see, however, that prior experience in testing in one field can help a lot with testing in another. The methods are essentially the same, such as A/B tests and Likert scales. The affect button was quite interesting, though I would need to know more about how well this actually works. Won't this form of gamification inherently lead to more positive results? I suppose this is why methods such as A/B tests are so important and effective, because even factors like this are taken into consideration during these tests (though that does mean comparing different tests may not be very effective if these factors are so prevalent). After having already done A/B testing in HCI, however, a slightly different method could be a more interesting experience for this course. (In hindsight, maybe we should have tried the Affect button instead of just doing a Likert scale).

Week 7: This lecture has shown just how everything we do is supposed to tie together in one project, and it has made it very clear just how important each aspect is. It seems that once you have everything written down and set up properly though, it is a very easy framework to modify and update in different iterations, with some incredible results given the right team. While not discussed, it may also make your process a lot easier to digest for involved stakeholders. I was not too sure for the rest of the project, but this week made it very clear just how important all of these steps were. Definitely a process to recommend using in the future for another project.

Course contribution:

In week 1, our group collaboratively discussed what direction we should take for our project. Ideas surrounding patient mood assessments, handling panic attacks through music or by calling the caretaker, and a pill dispenser came up. However, with the pill dispenser going against the philosophy of complementing, it was discarded. In hindsight, it seems that we underestimated the importance of looking back at this quick start, as we treated it more as part of the ideation phase.

In week 2, collaborative work on the Foundation started, where we started to deviate from our earlier ideas to move towards something focused on "music" or "improving one's physical well-being", though the final idea was not obtained just yet. It was here that we started to split up some of the relevant work. My assignment was to assess what AI and ICT technologies were required, and how to use them. Having updated it based on feedback, we end up with an approach focused on LLMs, voice-text translations, and the robot. With the LLM alone, massive privacy concerns start arising on where this data goes, and what would happen if this data were somehow to get "hacked". Especially in the current day, I have seen that people are wary of having a constant spy camera in their home. As such, especially when developing this further, we should keep in mind how we can make people feel safer. With our experiments being so short, I feel like this also was not part of our experiments, and could have negatively affected one's trust if our experiments were longer. This further justifies the need for an experiment to be drawn out over an extended period of time, which should be done if this robot were to be developed further.

In week 3, work on the Specification started. After collaboratively deciding on what ended up being the final project idea, we split up our work, with me working on the Use cases and their respective Claims. This seems to be the "core" of Specification, where all ideas are brought together, and largely determines what the end product looks like. As such, any changes made to design philosophy should be reflected here. The initial format was a bit confusing due to the second part of the table, though this was resolved with the new format. We ended up with a 3-step process: The initial setup, companion mode, and the dancing session. It seems we went overboard with this process, as we only ended up needing to test the dancing session specifically. In the future, it would be useful to restrict the use case section further, and only include that which is relevant to the current/previous iteration's testing procedures. Additional ideas can then be posted externally in either a separate "ideas" section, or some other document.

In week 4, after collaboratively finishing up what was still unclear to us in specification (including the need of some specificity on my part), we split up the task distribution for the presentation, for which I presented the Foundation (including Alice and our caretaker), made by Aleks. This presentation served as a way to solidify our current ideas and start a proper transition to the testing phase. As such, it seems that the impact of holding a presentation should not be underestimated.

After this, work on our Evaluation started. My focus was on the testing procedure itself, while helping out with some of the evaluation. There are quite a few different paths to take. The initial idea was to do some form of A/B test, but it quickly turned out that this was very impractical. Our robot is focused on a dancing session, where our focus was on evaluating whether people actually benefit from this and want to partake. In an A/B test, it was unclear what the B would be. For example, we could have just gone for the companion mode, but this limits feedback on the dancing session itself, and does not allow for a proper comparison of how one feels physically. As such, a Before/After approach seemed to be more beneficial, especially with our small sample size. Rather than force-fitting a specific type of test on your application, choosing the right test seems to be quite important.

In terms of our project, it was quite unfortunate that we did not manage to get our robot to work. It seems that robot functionality is quite inaccessible, with Miro's free functionality being quite outdated, and the main useful tool being inaccessible, at least for Miro. As a result, we had to go for a more LLM-focused prototype, though I cannot help but feel like it was a missed opportunity. It is still quite fun to interact with, though it is not as interesting as an actual robot. This has made it clear that we should pay a lot more attention to coding accessibility and time constraints next time around. Robots may sound cutting-edge, but they may not be as easy to work with. The end results were quite interesting, seeing people's pride go down with use of the robot. I do wonder if this also has to do with robots in general. We have already seen how e.g. ChatGPT can actually negatively impact the brain in the long term. The robot does essentially all the work for us; we are just along for the ride. If we want to make sure people stay proud, then it may be important that the activity is primarily influenced by them, e.g. by making sure that starting the dance session is their idea, and that they are doing the activity for their own benefit.

Final additional contributions (excluding group work):

Feedback updates for 1c, Use cases+Requirements, Evaluation.

Creation of the text for Prototype (not the prototype itself).

1. Foundation

1.a Operational Demands

1.a.1 Situated Activities

Environments:

- Dementia care centers in The Netherlands

- Home

Activities:

- Socializing

- Physical activity by dancing

- Listening to music

Activity: Daily dancing session

Goal: 15 minutes of safe light dancing between 14:30 and 16:30 to counter afternoon lethargy.

Preconditions: Resident not in pain, vitals stable, space clear, quiet hours not in effect, fall-risk band present, mobility aid nearby.

Artifacts: Portable speaker or robot audio, playlist of preferred songs, gait belt if needed, hydration cup visible.

Context constraints: Quiet hours are 13:30–14:30 and after 19:30.

Staffing ratio: 1 nurse per 6 patients in the afternoons. Shared lounge noise threshold is 55 dB.

Nominal steps: Detect window and ask permission with one-click yes or voice yes. Play priming snippet of a favorite track for max 15 seconds.

Offer two options: seated sway or standing step-touch. Mirror one simple move and slow the counts if confusion is detected. Insert a hydration cue at track change. Log duration and resident affect markers.

Breakdowns and refusal handling: If resident says “I already did that,” show today’s simple visual timeline. If mood low or confusion high, pivot to seated option or reminiscence talk about the song. After two refusals in a row, back off and retry after 30 minutes. If agitation cues appear, stop, switch to calming playlist, notify caregiver.

Frequency and duration: Target 1–2 sessions per day, 10–20 minutes each, 5 days per week.
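
The refusal handling above can be sketched as a small piece of state per resident. This is only an illustration under stated assumptions, not the prototype's code: the class `DancePromptPolicy`, its method names, the returned action labels, and the choice of a half-open session window are all hypothetical. Only the window times, the two-refusal limit, and the 30-minute retry delay come from the activity description.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional

# Values taken from the activity description above.
WINDOW_START = time(14, 30)
WINDOW_END = time(16, 30)
MAX_REFUSALS_IN_A_ROW = 2
RETRY_DELAY = timedelta(minutes=30)


@dataclass
class DancePromptPolicy:
    """Hypothetical helper that tracks refusals for one resident."""

    refusals_in_a_row: int = 0
    retry_not_before: Optional[datetime] = None

    def may_prompt(self, now: datetime) -> bool:
        # Assumption: the session window is half-open, [14:30, 16:30).
        in_window = WINDOW_START <= now.time() < WINDOW_END
        backing_off = (
            self.retry_not_before is not None and now < self.retry_not_before
        )
        return in_window and not backing_off

    def on_response(self, now: datetime, accepted: bool,
                    agitated: bool = False) -> str:
        """Return a (hypothetical) action label for the robot to carry out."""
        if agitated:
            # Agitation always wins: stop, calm down, notify the caregiver.
            self.refusals_in_a_row = 0
            return "stop_and_notify_caregiver"
        if accepted:
            self.refusals_in_a_row = 0
            self.retry_not_before = None
            return "start_session"
        self.refusals_in_a_row += 1
        if self.refusals_in_a_row >= MAX_REFUSALS_IN_A_ROW:
            # Two refusals in a row: back off and retry after 30 minutes.
            self.refusals_in_a_row = 0
            self.retry_not_before = now + RETRY_DELAY
            return "back_off"
        return "acknowledge_refusal"
```

Keeping the counter and the retry time in one object means the "ask permission" step only needs to call `may_prompt` before speaking, so the robot cannot nag a resident who has just declined twice.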

1.a.2 Stakeholders

Stakeholders and Communication

Direct: Residents like Alice, primary caregivers, activities coordinator, family.

Indirect: Care home administrator, physiotherapist, GP, data protection officer, IT support, vendor service, regulator/insurer, other residents nearby.

Core values and tensions:

- Autonomy vs safety: resident choice can conflict with fall risk or hydration schedules.

- Connection vs efficiency: preserving human interaction while reducing workload.

- Privacy vs personalization: richer profiles enable better prompts but increase data sensitivity.

- Family reassurance vs caregiver discretion: families want notifications, caregivers want control of messaging tone and timing.

Communication loops:

- Robot prompts resident and logs outcomes.

- Caregiver dashboard reviews adherence and refusals.

- Family receives weekly summaries, not live surveillance, unless resident opts in.

- Exceptions escalate to nurse in charge. Data protection officer audits access quarterly.

Value stories:

- As a law enforcement agent, I want the robot to keep the patient in a safe environment, where they will not need assistance from law enforcement.

- As a dementia patient, I want to have meaningful conversations with others that don't revolve around my disease, to support my need for interpersonal connections.

- As a dementia patient, I want my physical well-being to be kept in check, so that I can perform my daily activities.

- As a dementia patient, I want to be able to decide what I do each day, so that I feel in control of my life (autonomy).

- As a dementia patient, I want my dementia to worsen at a much slower rate, or not worsen at all if possible.

- As a dementia patient, I want to trust myself when making decisions, so that I feel in control of my life (autonomy).

- As a caregiver, I want my workload to be alleviated.

1.a.3 Problem Scenario

Alice Cornelius, 78, is in the early stages of dementia and lives in a small Dutch care home. She enjoys 60s pop music and short afternoon activities. Sometimes she forgets that she has already completed a task or that she still needs to do it. Although she can physically perform light exercise, she lacks initiative without reminders. Caregivers spend significant time prompting her to stay active or hydrated.

Problem: Alice often fails to start planned activities due to short-term and prospective memory lapses. This leads to inactivity and increases caregiver workload. The goal is to design a robot that gently reminds and motivates her, while maintaining her sense of autonomy and social connection.

Entry conditions: The resident is physically able to move with light assistance, can communicate with the robot, and shows mild memory loss but remains socially responsive.

Exit criteria: Residents begin more daily activities independently (e.g., dancing or hydration) compared to a baseline week. Caregivers spend less time issuing reminders, and residents continue to interact positively with others, showing no decrease in social engagement.

Refusing compliance: The patient may not comply, believing they have already done the activity, the activity is pointless, or they are not in the mood to do the activity. This requires the robot to foster a level of trust, or to somehow convince, or to back off. (For this, the robot could learn about each individual patient.)

1.b Human Factors

1.b.1 Situated Cognition

Autonomy

Premise: Offering real choices and ask-before-assist reduces reactance and increases initiation.

Robot rule: Always ask permission, present two options, allow easy “not now.”

Resident signals: Accepts prompt, starts task without human help, refusal reason.

Caregiver signals: Fewer direct prompts, fewer escalations.

Primary measures: Self-initiation rate, refusal rate, cue escalations per session.

Meaningful activity

Premise: Identity-linked content increases participation.

Robot rule: Tailor music and tasks to personal history, show why this task matters.

Resident signals: Time on task, positive facial or verbal markers.

Caregiver signals: Less nudging during sessions.

Primary measures: Engaged minutes per session, positive-affect tag count.

Social connectedness

Premise: Light social scaffolding boosts mood and peer contact.

Robot rule: Greet by name, seed simple topics, invite nearby peers.

Resident signals: Number of peer interactions started.

Caregiver signals: Observed quality of interaction.

Primary measures: Peer-interaction starts per session, conversation turns.

Trust

Premise: Clear limits and consistent behavior produce appropriate reliance.

Robot rule: State capabilities, confirm logs, admit uncertainty.

Resident signals: Accepts robot help after one cue, low confusion.

Caregiver signals: Willingness to rely on logs and schedules.

Primary measures: Prompt acceptance rate, short trust check for caregivers.

Learning and memory

Premise: External cues and small implementation intentions aid prospective memory.

Robot rule: Short multi-modal cues, visual today-timeline, single next step.

Resident signals: Starts within 60 seconds of cue, completes step sequence.

Caregiver signals: Fewer step-by-step handovers.

Primary measures: Time-to-start, step completion without human handover.

Emotion and stress

Premise: Fast detection of frustration and quick de-escalation protects engagement.

Robot rule: Detect hesitations, pivot to easier option, retry later.

Resident signals: Agitation flags, early stop requests.

Caregiver signals: Redirection events.

Primary measures: Agitation flags per session, safe early stops.

Interaction fluency

Premise: Low cognitive load improves comprehension.

Robot rule: Short utterances, repeat on request, show-and-say.

Resident signals: “Please repeat” count, misunderstanding count.

Caregiver signals: Corrections needed.

Primary measures: Repeats per session, misunderstanding corrections.
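To make the primary measures above concrete, the following is a minimal sketch of how a few of them could be computed from per-session logs. The `SessionLog` fields and the exact definitions (for example, self-initiation rate as self-started tasks divided by prompts given) are our own assumptions for illustration, not a fixed part of the design.

```python
from dataclasses import dataclass


@dataclass
class SessionLog:
    """One robot session. All field names are hypothetical."""
    prompts_given: int       # prompts the robot issued
    prompts_accepted: int    # prompts the resident accepted
    self_initiated: int      # tasks started without human help
    cue_escalations: int     # times a caregiver had to step in
    engaged_minutes: float   # time on task
    repeat_requests: int     # "please repeat" count


def ratio(part: float, whole: float) -> float:
    """Return part / whole, or 0.0 when the denominator is zero."""
    return part / whole if whole else 0.0


def summarize(sessions: list[SessionLog]) -> dict[str, float]:
    """Aggregate a subset of the primary measures over several sessions."""
    n = len(sessions)
    prompts = sum(s.prompts_given for s in sessions)
    accepted = sum(s.prompts_accepted for s in sessions)
    return {
        # Assumed definition: self-started tasks per prompt given.
        "self_initiation_rate": ratio(sum(s.self_initiated for s in sessions), prompts),
        "refusal_rate": ratio(prompts - accepted, prompts),
        "prompt_acceptance_rate": ratio(accepted, prompts),
        "cue_escalations_per_session": ratio(sum(s.cue_escalations for s in sessions), n),
        "engaged_minutes_per_session": ratio(sum(s.engaged_minutes for s in sessions), n),
        "repeats_per_session": ratio(sum(s.repeat_requests for s in sessions), n),
    }
```

Comparing the output of `summarize` for a baseline week against a later week would give the before-and-after view that the exit criteria ask for.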

1.b.2 Evaluation Methods

<Articulate the Evaluation Methods with literature references, that can be used to assess the aspects being mentioned in the Operational Demands and Situated Cognition sections. This is used as foundation for the actual Evaluation>

Our product aims to address two main tasks. First, it should increase the autonomy of the patients; second, it should provide social interaction to improve their mood.

Usability metrics, as discussed by Nielsen [1], are an important class of measures. We plan to address two of them:

- Success Rate: We will measure the number of patients who performed a task by themselves with the aid of the system.

- User Satisfaction: We plan to ask the patients who interacted with the system how satisfied they are, on a scale ranging from very unsatisfied to very satisfied.

Another important topic is defined by usability and user experience goals, as presented by Winograd [2]. For this, we plan to use 3 metrics:

- Cognitive Load: How much mental effort the system requires from the user. Users will rate the system from very easy to use to very hard to use.

- Emotional Impact: Engaging with the system should be a fun and entertaining experience for the users. For this, users will be asked to rate how they feel when interacting with the system, be that happy, bored, annoyed or some other emotion.

- User Engagement: A good system will be used because users find it easier to perform a task with its help. With this in mind, both the number of times users use the system and the total time they spend with it will be measured. Good user engagement shows as a high number of uses with long use times.

Given that the system will use AI, there are other important metrics we need to discuss, such as accuracy and robustness [3], but also trust and privacy [4]. Both accuracy and robustness are measured by the system's ability to correctly remember the tasks the user has to perform and alert them in time, as well as by the correctness of the information it provides. Trust will be measured by asking the patients and the caregivers whether they trust the system to properly aid the patient with their daily tasks. Privacy is not exactly a metric, since it must be maintained in any case to respect the patient's rights, but it is important to address nonetheless.

Since the evaluation will be conducted with dementia patients, it is important to ensure that all procedures are tailored to dementia care. Dementia patients can become nervous when interacting with unfamiliar individuals. Therefore, during evaluations, it is important that the sessions are conducted by, or in the presence of, a caregiver whom the patient trusts.

Additionally, dementia patients will likely require guidance while completing questionnaires. The identity of the guiding person can influence the patient's mood and responses. Care must be taken to minimize stress and ensure that the evaluation environment is familiar and supportive.

[1] Nielsen, J. (1993). Usability Engineering. Academic Press.

[2] Winograd, T. (2001). Interaction Design: Getting the User's Perspective. ACM Press.

[3] Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). "Why should I trust you?" Explaining the predictions of any classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.

[4] Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence.

1.c Technology

1.c.1 AI and ICT

Our robot is expected to communicate with its patients when not dancing, ideally in a way that is tailored to each patient. Its responses should not be static, so that it feels more human. For this, an LLM is well suited. The LLM will keep in mind the following:

- The patient it is talking to → For this it needs an understanding of its current location, which is handled by the robot itself.

- The music and dances the patient likes → For this, the LLM should be able to activate, remember, and play the tunes the patient likes.

- Any other activities that need to be done → For this, the robot should use speech alongside a task list.

- Due to the above, a separate text-to-speech program should be involved, which speaks the LLM's text output aloud. Meanwhile, a speech-to-text program will allow the patient to communicate with it using their own voice.

- To allow for the above, a microphone and speaker should be included with the robot. As will be described in the Social Robot section, this is already handled.

- A general idea of the patient's personality → For this, it needs experience with the patient. Prior knowledge given by caregivers or family members could also be useful. Through knowledge of the patient's personality, the robot can develop a sense for the Human Factor of 'interaction fluency'.
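As an illustration of how the items above could be handed to the LLM, the sketch below collects them in a small profile object and turns it into a system prompt. The class name, the field names and the prompt wording are all hypothetical; the actual prompt format would depend on the chosen LLM.

```python
from dataclasses import dataclass, field


@dataclass
class PatientProfile:
    """What the LLM should keep in mind about one patient (hypothetical fields)."""
    name: str
    location: str                                             # reported by the robot
    liked_genres: list[str] = field(default_factory=list)
    pending_tasks: list[str] = field(default_factory=list)
    personality_notes: list[str] = field(default_factory=list)  # from caregivers or family


def build_system_prompt(profile: PatientProfile) -> str:
    """Assemble a plain-text system prompt from the profile."""
    lines = [
        f"You are a companion robot talking to {profile.name} in {profile.location}.",
        "Use short sentences and always ask before assisting.",
    ]
    if profile.liked_genres:
        lines.append("Preferred music: " + ", ".join(profile.liked_genres) + ".")
    if profile.pending_tasks:
        lines.append("Tasks still to do today: " + "; ".join(profile.pending_tasks) + ".")
    if profile.personality_notes:
        lines.append("Notes on personality: " + "; ".join(profile.personality_notes) + ".")
    return "\n".join(lines)
```

Keeping the profile separate from the prompt text means caregivers could update tasks or preferences without touching the conversation logic.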

Risks and mitigations

With the above-mentioned systems, there are a few concerns that should be kept in mind, with the focus being on privacy:

- If the LLM is hosted by a third party (such as OpenAI), patient data may end up on their servers, which would be a liability. As such, it is important for the LLM to run locally on the robot itself. This way, privacy concerns can be handled more effectively. With this in mind, the LLM should operate only with transcripts from the past 3 months. Any data older than this should be removed from its context window. However, a local LLM may be weaker than a public one.

- The text-to-speech and speech-to-text programs should be executed locally, without storing any data for the sake of privacy. While transcripts may still be stored on the LLM, this reduces the number of potential security risks.

- With voice-based interaction, the patient may constantly be "heard" by the robot, even when not interacting with it. To make sure sensitive information is not overheard, there should be a standby mode, which activates whenever the robot is not in use. While in standby mode, the robot will not "listen" to the patient, and the LLM will receive no transcripts. There will also be a way to "wake up" the robot (e.g. by petting it, or whenever the robot wants to initiate a conversation).
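The retention limit and the standby mode described above can be sketched together as follows. This is only a sketch under stated assumptions: "3 months" is approximated as 90 days, and the class and method names are hypothetical rather than part of any existing robot API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

RETENTION = timedelta(days=90)  # assumption: "3 months" approximated as 90 days


@dataclass
class Transcript:
    spoken_at: datetime
    text: str


@dataclass
class ListeningGate:
    """Keeps transcripts only while awake and only within the retention window."""
    awake: bool = False
    transcripts: list[Transcript] = field(default_factory=list)

    def wake(self) -> None:
        """Called e.g. when the robot is petted or starts a conversation."""
        self.awake = True

    def standby(self) -> None:
        self.awake = False

    def hear(self, text: str, now: datetime) -> bool:
        """Store the utterance only while awake; return whether it was kept."""
        if not self.awake:
            return False
        self.transcripts.append(Transcript(now, text))
        return True

    def context(self, now: datetime) -> list[str]:
        """Delete transcripts older than RETENTION and return the remaining texts."""
        cutoff = now - RETENTION
        self.transcripts = [t for t in self.transcripts if t.spoken_at >= cutoff]
        return [t.text for t in self.transcripts]
```

Calling `context` before every LLM request would ensure that expired transcripts are deleted, and that nothing said during standby ever reaches the LLM.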

1.c.2 Social Robot

For this task we have chosen Miro, the robot dog. While it is a dog, it has been shown that people greatly enjoy conversing with it through regular speech: https://www.youtube.com/watch?v=quVza0CED5o . Dogs are also great companions that can provide warmth to patients.

We went over the robots with the following ideas:

Pepper is autonomous/can move around and has a tablet

Nao can dance, can do general motions as they have arms and legs.

Navel has expressions (uncanny valley?)

Miro is a dog that can talk https://www.youtube.com/watch?v=quVza0CED5o

Some of us felt that Navel was too uncanny to feel comforting, so we left it out. Pepper and Nao were both valid options; however, we deemed that Miro would be the most comforting of the four available options, which should also benefit our Human Factor of 'trust'. While Miro cannot navigate on its own, we plan to have a personal robot for each room/person, removing the need for complex navigation. Moreover, the robot is small enough to be carried if the patient desires to do so and is able to, but this is not advised.

According to their research paper (https://www.researchgate.net/profile/Tony-Prescott/publication/325788257_MiRo_An_Animal-like_Companion_Robot_with_a_Biomimetic_Brain-based_Control_System/links/5b23c635aca272277fb22a5d/MiRo-An-Animal-like-Companion-Robot-with-a-Biomimetic-Brain-based-Control-System.pdf), Miro has the following features:

- Fully programmable

- Clearly a robot, yet has an animal-like appearance (dog).

- Moveable parts: nodding+rotating head, moveable hearing ears, blinking eyes, wagging tail.

- Responds to touch - This allows for the "wake up" measure described in AI and ICT, where petting the dog can wake it up. Since we are focusing on dementia patients who are still able to do activities physically, this is considered a viable option. However, it should be kept in mind when extending functionality to patients with physical limitations.

- Layered control architecture: Fast and slow layers.

- Can "operate with bespoke control systems"

- Has built-in exploration and obstacle-detection - However, as per the paper, it does not have built-in navigation: It simply moves to noise, even if it avoids obstacles. In both the care center and at home, Miro is assumed to have a fixed area, namely either a specific house floor, which is universal for these robots, or a specific care center room. As such, the robot is always assumed to be able to access the patient whenever they are in the vicinity, and complex navigation is not required.

- Considering the video, it has text-to-speech.

2. Specification

3. Evaluation

3.a Prototype

Scope and purpose

Our prototype is focused on assessing whether our claims CL001: Regular physical activity improves PwD physical well-being and CL002: Dance session improves PwD mental well-being hold. As such, its functionality is focused primarily on UC02.1: Dancing Session Start, incorporating RQ01.2: Human-Robot conversation and RQ01.4: Hold dance session. While UC02.2: Dancing Session Clean-Up and UC03.1: Companion Mode are incorporated, this is only at a superficial level, since the experiment ends at that point. Our experiment procedure is explained in more detail in Evaluation: Test.

Procedure

Our prototype procedure is displayed in the following Figure:

An overview of the prototype, showcasing the different functionalities used from start to finish.

In terms of functionality, our prototype uses a visual version of the robot dog Miro that is operated through an LLM. An actual robot was not used. During the interaction, the user's speech is converted with speech-to-text and Miro's replies are spoken with text-to-speech, allowing for voice communication. To play a song for the dance session, our LLM makes use of the Spotify API, starting, pausing, or stopping the song as needed. This closely matches our described AI and ICT technologies.

The exact procedure is as follows, based on UC02.1: Dancing Session Start:

1. Miro introduces itself and invites the user to a Dance Session using the previously mentioned communication method.

2. Once the user has accepted, a short discussion is held on the song/genre. Namely, the LLM asks the user if they would be interested in certain genres, such as jazz, rock, pop, or classical. We note the user is not forced to pick one of the given options.

3. Based on the user's response, a song is played using the Spotify API.

4. The song plays until the user interrupts it by talking to the LLM, allowing them to modify the state of the activity. Here, the user can, for example, change the song or choose to stop the activity entirely, which sends the LLM to its Companion Mode state.

Companion Mode. If the user is not in the process of entering the 'Dancing Session' or currently in one, the LLM will be in 'Companion Mode' as a fallback. We note this state was not explored during the experiments.
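The procedure above can be read as a small state machine. The sketch below is one possible way to model it and is not the prototype's actual code: the state names and the intent labels (which we assume the LLM would derive from the user's speech) are hypothetical, and the Spotify calls are left out.

```python
from enum import Enum, auto


class State(Enum):
    COMPANION = auto()       # fallback state
    INVITED = auto()         # Miro has invited the user to dance
    CHOOSING_SONG = auto()   # short discussion on song/genre
    DANCING = auto()         # song is playing


# (current state, user/robot intent) -> next state
TRANSITIONS = {
    (State.COMPANION, "invite"): State.INVITED,
    (State.INVITED, "accept"): State.CHOOSING_SONG,
    (State.INVITED, "decline"): State.COMPANION,
    (State.CHOOSING_SONG, "pick_song"): State.DANCING,
    (State.DANCING, "change_song"): State.CHOOSING_SONG,
    (State.DANCING, "stop"): State.COMPANION,
}


def step(state: State, intent: str) -> State:
    """Return the next state; unknown intents leave the state unchanged."""
    return TRANSITIONS.get((state, intent), state)


def run(intents: list[str], start: State = State.COMPANION) -> State:
    """Apply a sequence of intents and return the final state."""
    state = start
    for intent in intents:
        state = step(state, intent)
    return state
```

For example, `run(["invite", "accept", "pick_song", "stop"])` walks through steps 1 to 4 and ends back in Companion Mode.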

3.b Test

1. Introduction

With the previously described prototype, we can begin testing. The focus of our test is on CL001: Regular physical activity improves PwD physical well-being and CL002: Dance session improves PwD mental well-being, which are the main claims of our primary use case UC02.0: Dancing Session, more specifically UC02.1: Dancing Session Start. Through a before-and-after testing format with a 5-point Likert scale questionnaire and HR measurements, we assess whether our claims hold.

2. Method

2.1 Participants

Across TU Delft, we recruited 9 students (5 M, 4 F) to take part in our experiments. We note these are not actual dementia patients, as we had limited access to dementia patients during the course of this experiment. While there were no inclusion or exclusion criteria, this puts our participants roughly in the 18-27 age range, with experience in some field of technology.

2.2 Experimental design

Our experiment made use of our prototype's functionality. A before-and-after test was performed to see if our dancing session validated CL002: Dance session improves PwD mental well-being by making them fill in an affect-focused questionnaire before and after the experience. Heart rate measurements were taken in the meantime to assess CL001: Regular physical activity improves PwD physical well-being. Further details are described in Measures.

2.3 Tasks

Experiment host: Inform the user of the experiment, ask the participant to fill in the consent form, ask the participant to fill in the questionnaires, time dancing session length.

Participant: Engage with the robot, wear the Garmin Connect device, dance, fill in 2 questionnaires.

Robot: Talk to the participant, encourage the user to dance, hold dance session.

2.4 Measures

We tested two claims, each through their own measures.

CL001: Regular physical activity improves PwD physical well-being: For this, we used a sports device similar to a Fitbit, namely a Garmin Connect device. After activating a workout session on the device, we quantitatively measured the participant's heart rate and calories burned to gauge their physical engagement. We also measured the time spent dancing with a stopwatch, starting the first lap at the moment a song started and ending when the participant finished the experiment. We expect heart rates to enter the range of a light or medium intensity activity for our claim to hold.

CL002: Dance session improves PwD mental well-being: For this, we used a self-report measure: we made a questionnaire featuring questions on a 5-point Likert scale (Strongly Disagree to Strongly Agree) to test one's current mental well-being. The results of the individual questionnaire rounds are averaged per question, allowing for a before-and-after comparison. The questions we asked were as follows, with inspiration taken from the PANAS questionnaire, originally devised by Watson et al. in 1988:

1. I feel motivated

2. I feel proud of myself

3. I am feeling irritable

4. I am feeling inspired

5. I feel miserable

6. I am content

7. I feel stressed

8. I am in a good mood

9. I feel physically exhausted

10. I would like to have a robot dog

11 (Round 2 only). Do you have any additional thoughts on the experience?

For the above questions, the expectation is that values for our positive affect questions, 1, 2, 4, 6, 8, increase after the experiment. Meanwhile, our negative affect questions, 3, 5, 7, should decrease.

Two questions here are not focused on the claim itself. Question 9 is an additional question focused on confirming the physical exhaustion of our other claim, and is thereby expected to increase. Question 10 is an additional question that does not confirm a dedicated claim, but is there to provide insight into people's approval of Miro.
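The per-question averaging and before-and-after comparison described above could be computed as in the following sketch. It assumes each participant's answers are stored as a mapping from question number to a score from 1 to 5; the function names are our own and the code contains no experimental data.

```python
from statistics import mean

POSITIVE = (1, 2, 4, 6, 8)   # expected to increase after the session
NEGATIVE = (3, 5, 7)         # expected to decrease after the session


def question_means(responses: list[dict[int, int]]) -> dict[int, float]:
    """Average the Likert scores per question over all participants."""
    questions = sorted(responses[0])
    return {q: mean(r[q] for r in responses) for q in questions}


def affect_change(before: list[dict[int, int]], after: list[dict[int, int]]) -> dict:
    """Compare the two questionnaire rounds question by question."""
    b, a = question_means(before), question_means(after)
    delta = {q: a[q] - b[q] for q in b}
    return {
        "delta": delta,                                          # per-question change
        "positive_shift": mean(delta[q] for q in POSITIVE),      # > 0 supports CL002
        "negative_shift": mean(delta[q] for q in NEGATIVE),      # < 0 supports CL002
    }
```

A positive `positive_shift` together with a negative `negative_shift` would match the expectation stated above; questions 9 and 10 can be read directly from `delta`.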

2.5 Procedure

1. We invite the participant to interact with our robot Miro-E. Before starting, they are asked to fill in the informed consent form.

2. If they consent, the user puts on the Garmin Connect device, and the procedure continues.

3. First, the user should fill in the first instance of the questionnaire.

4. The host of the experiment informs the user that they may start interacting with Miro-E at any time.

5. The user greets Miro-E. Here, we have the user inform Miro of their personal interests and proceed from UC03.1: Companion Mode.

6. After introducing each other, Miro-E encourages the user to start a "dancing session".

7. The user requests to start the dance session.

8. Once done, we ask the participant to fill in the questionnaire once more.

2.6 Material

During the procedure we make use of three materials.

- A prototype mimicking Miro-E's functionality.

- A Garmin Connect device, which should be worn by the participant.

- A version of the Informed Consent Form, where it is acknowledged that the participant's answers to a questionnaire, as well as their metrics on a Garmin Connect device will be used for further analysis. The participant agrees that they are doing this of their own volition, and they may leave at any time. Only those participants who agreed to the terms engaged with the experiment. This consent form also highlights that this is focused on people with dementia.

3. Results

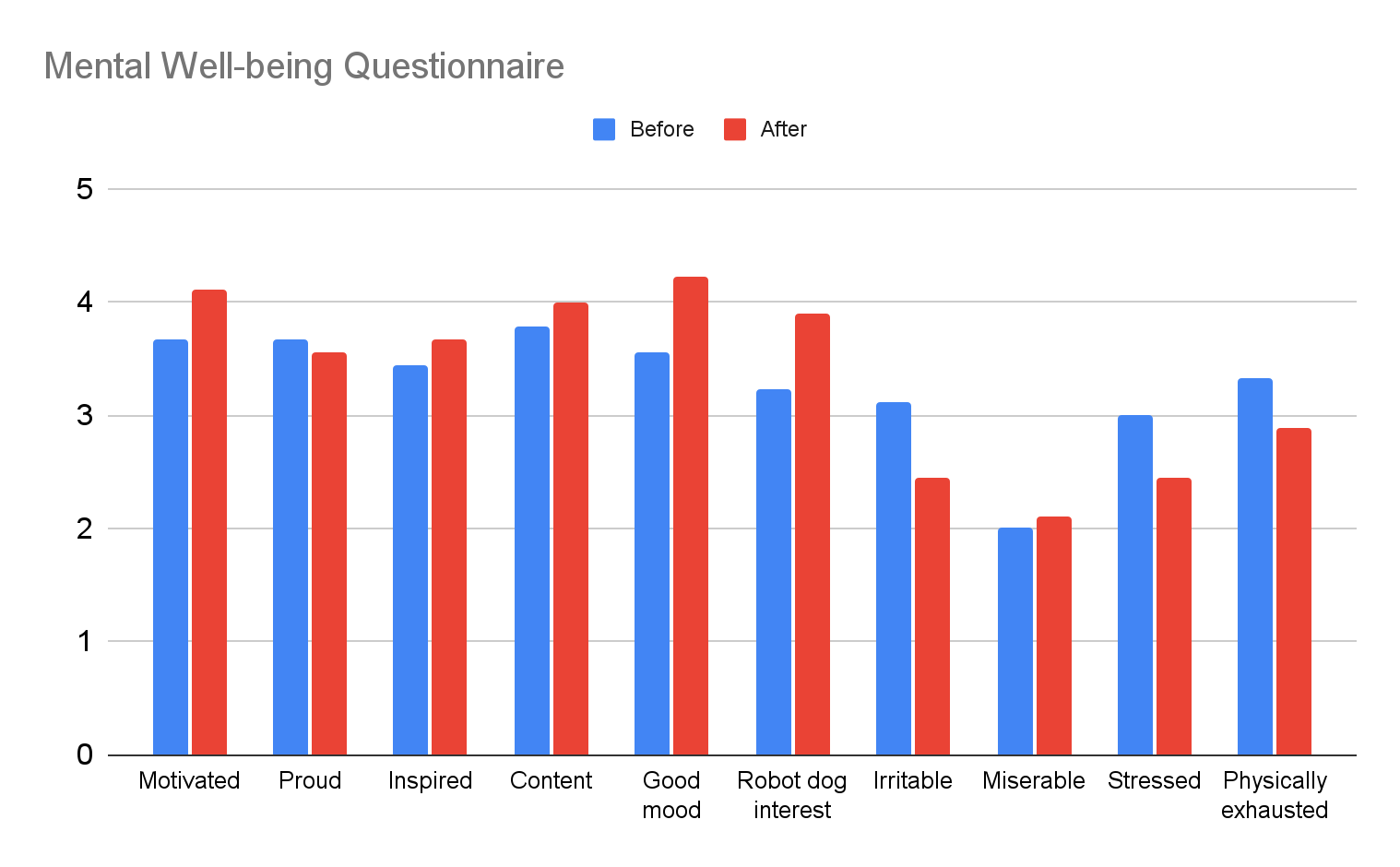

First, we go over CL002: Dance session improves PwD mental well-being. As can be seen in Figure 1, on average interacting with the prototype improved general mood for our participants.

Most of the positive emotions improve after interacting, while most of the negative emotions decrease in intensity. This indicates that the prototype mostly improves general mood and helps the participant feel better. The biggest gaps occur for Good mood and Stress. Interestingly, Physical exhaustion also decreases after dancing and interacting with the prototype, which we did not expect. The two feelings that worsened after the interaction are pride and feeling miserable.

Figure 1: Average response for each questionnaire question before and after the experiment.

Next, regarding CL001: Regular physical activity improves PwD physical well-being. Due to only having 1 Garmin Connect device, this experiment was performed on only half of the participants. By trimming the activities stored on the Garmin Connect device to the dancing session, measurements can be obtained for heart rates and calories burned while dancing, such as the following:

Figure 2: Example of one participant's trimmed measurements.

On average, we obtained the following results: the average heart rate during the activity was 103 bpm, with the maximum heart rate ranging from 124 to 136 bpm. This means participants tend to enter zone 2 for a portion of the session, while usually being in zone 1. The total calories burned ranged from 0 to 46, averaging 21. The 0 calories were recorded for a participant who did not dance during the dancing session and instead sat still, listening to music. Finally, the average music play length during the experiment was 5:18. We note this was likely shortened because the students were busy.
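As a rough check on the zone statements above, heart rates can be mapped to zones as a percentage of maximum heart rate. The sketch below rests on two assumptions that are not taken from our measurements: maximum heart rate is estimated as 220 minus age, and zones follow a common five-zone model in 10% steps starting at 50% of maximum. The device's own zone settings may differ.

```python
# Lower bound of each zone as a fraction of maximum heart rate (assumed model).
ZONE_LOWER_BOUNDS = ((0.5, 1), (0.6, 2), (0.7, 3), (0.8, 4), (0.9, 5))


def estimated_max_hr(age: int) -> int:
    """Rule-of-thumb estimate of maximum heart rate (assumption: 220 - age)."""
    return 220 - age


def hr_zone(bpm: float, max_hr: float) -> int:
    """Return the zone (1-5) for a heart rate, or 0 if it is below zone 1."""
    fraction = bpm / max_hr
    zone = 0
    for lower, number in ZONE_LOWER_BOUNDS:
        if fraction >= lower:
            zone = number
    return zone
```

Under these assumptions, for a 22-year-old (estimated maximum of 198 bpm), `hr_zone(103, 198)` gives zone 1 and both `hr_zone(124, 198)` and `hr_zone(136, 198)` give zone 2, which is consistent with the reading above.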

4. Discussion

With a larger sample size, we could say that, given how most feelings changed positively, the results support our claim CL002: Dance session improves PwD mental well-being. The two metrics that worsened are most likely related to the implementation of the interaction. Most participants complained that the voice recognition did not always work well, and that Miro's side of the conversation felt very scripted. The frustration of being misheard, paired with a scripted interaction, most likely led to our participants' decrease in pride and increase in feeling miserable. On the other hand, good music and dancing improved the general mood of participants.

Most of the participants did not go all out when dancing. Instead, they slightly moved around and danced without intensity. Based on the approximate calories burned and measured heart rate, they tend to enter Zone 2, often associated with light aerobic exercises. Participants most likely felt energized by the increase in heart rate without feeling the actual effort due to its light intensity, making the activity have the unintentional side-effect of working as a means to wake up properly.

Unfortunately, due to the small sample size and the Technical University background of our participants, it is unclear if these results are valid. More extensive testing on actual dementia patients would need to be performed to fully validate the above findings and our claims. Despite this, these results give early insights into potential benefits and limitations that our experiments contain.

5. Conclusions

Overall, the interaction supports our claim. However, there are still points that can be improved in the future.

Firstly, improving the flow of conversation and the voice recognition of Miro will most likely improve the two metrics that worsened during the experiment. As such, in a future iteration, the quality of voice recognition should become a strong new Requirement. Due to issues with background noise, we propose 'noise cancellation' to be included in this new Requirement.

Moreover, making Miro dance alongside the participant will also most likely help improve the mood by an even bigger margin. It would be interesting to test this with other robots as well. Dancing alongside a humanoid robot might make the participant more comfortable dancing, even in public spaces. To handle this in a future iteration, more extensive analysis on other Social Robots should be done, with the focus on making them compatible with our Use Cases by updating these. A Requirement on the dancing features of robots and their impact may also need to be included, alongside a tested claim to validate whether this impact is positive.

For testing, given the low sample size, testing with more participants will lead to more reliable results. While the current results suggest the potential of this prototype, it cannot be said with certainty that this is a good enough product for PwD.

Additionally, it may be of interest to try the experiment over an extended period of time, to confirm that the positive impact on participants does not stem from short-term novelty.