Test

Our robot aims to help delay the progression of dementia, or at least slow down the deterioration of memory. Ideally, we would test the robot with real people with dementia (PwD) over a relatively long period to see whether it really works, but this is not feasible within our project. Our evaluation therefore uses a control-group design: participants are divided into two groups, group A using the intelligent robot and group B using the dumb robot.

The difference between the dumb and the intelligent robot is small. When a user gives a wrong answer, the intelligent robot tells them the right answer, while the dumb robot only tells them that they have made a mistake.
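The only behavioural difference between the two conditions can be sketched as a single feedback function. This is a minimal illustration under stated assumptions, not the project's NAO code: the function name `quiz_feedback`, the `intelligent` flag, the feedback phrasing and the example activities are all hypothetical.

```python
def quiz_feedback(answer, correct, intelligent):
    """Return the robot's spoken feedback for one quiz question (hypothetical sketch)."""
    # Compare case-insensitively and ignore surrounding spaces, since
    # speech-recognition output may differ in capitalization.
    if answer.strip().lower() == correct.strip().lower():
        return "Correct!"
    if intelligent:
        # Intelligent robot (group A): reveal the right answer.
        return f"That is not right. The correct activity is {correct}."
    # Dumb robot (group B): only signal that the answer was wrong.
    return "That is not right."


if __name__ == "__main__":
    # "cooking" and "brushing teeth" are placeholder activities.
    print(quiz_feedback("cooking", "brushing teeth", intelligent=True))
    print(quiz_feedback("cooking", "brushing teeth", intelligent=False))
```

Keeping both conditions in one function with a single flag mirrors the control-group idea: everything except the corrective sentence is shared.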

Problem statement and research questions

The main use cases that the evaluation focuses on are UC001: Daily todo list and UC005: Quiz. Based on the claims corresponding to those use cases, we derive the following research questions:

- Are PwD willing to play the quiz?

- Are PwD happy to listen to music?

- Are PwD happy if they get the correct answer?

- Does the quiz strengthen PwD's memory of the association between music and activities?

Method

We use a control-group evaluation: one group of participants interacts with a dumb robot and the other group interacts with the intelligent robot. The only difference between the two groups is the independent variable (dumb or intelligent robot), so differences in the results can more reasonably be attributed to it.

In addition, our group decided to use a mixed-method approach for the evaluation:

- Quantitative data are collected during the experiment, such as the number of mistakes a participant makes during the quiz. Participants are also asked to give the robot a score based on the System Usability Scale (SUS) [1].

- Qualitative data, such as how satisfied participants are with the robot, are gathered through questionnaires.

By measuring these two types of data, we can assess whether our claims hold and answer the research questions.

Participants

We invited 19 participants. To test whether the quiz helps people better memorize music-activity links, participants were divided into two groups: group A (9 participants) with the intelligent robot and group B (10 participants) with the dumb robot.
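The report does not state how participants were allocated to the two groups. If allocation were random, a 9/10 split could be sketched as below; random allocation is an assumption here, and `assign_groups` and the participant IDs are hypothetical.

```python
import random


def assign_groups(participants, size_a, seed):
    """Randomly split participants into group A (intelligent robot) and group B (dumb robot).

    Hypothetical sketch: the report does not say how allocation was done.
    """
    if not 0 <= size_a <= len(participants):
        raise ValueError("size_a must be between 0 and the number of participants")
    pool = list(participants)          # copy so the caller's list is untouched
    random.Random(seed).shuffle(pool)  # seeded RNG makes the split reproducible
    return pool[:size_a], pool[size_a:]


if __name__ == "__main__":
    ids = [f"P{i:02d}" for i in range(1, 20)]  # 19 hypothetical participant IDs
    group_a, group_b = assign_groups(ids, size_a=9, seed=2024)
    print(len(group_a), len(group_b))  # 9 10
```

Using a seeded generator lets the same split be reproduced later, for example when re-checking which participant was in which group.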

Experimental design

The experiment is designed to simulate how the memory of music associated with daily activities is reinforced through repeated practice, and to investigate whether the quiz actually helps with this learning.

All participants signed a consent form that informed them of how the collected data would be used and of the goal of the evaluation. In our prototype, users can personalize the associations between music and activities based on their own existing knowledge. However, because of the limited time and the need for results that are comparable between groups, the evaluation used a fixed set of six music-activity pairs for all participants.

Participants listened to the music and were asked to remember the associated activities. To this end, they were given a list of the six activities. To make the pairs harder to remember (so that participants, who do not have dementia, had to rely less on pretending to), the music was played in a different order from the activities on the list. Furthermore, the pieces were quite similar (all instrumental). Participants then had three minutes to practice with the NAO.
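One way to guarantee that the playback order differs from the printed list is to draw a derangement, i.e. a shuffle in which no piece is played at its list position. This is a stricter condition than the report states (it only says "a different order"), and `play_order` is a hypothetical helper; it assumes the six activity names are unique.

```python
import random


def play_order(activities, rng):
    """Return the activities shuffled so that no item keeps its list position.

    Rejection sampling: reshuffle until the permutation is a derangement.
    Assumes the activity names are unique and there are at least two of them.
    """
    if len(activities) < 2:
        raise ValueError("need at least two activities to reorder")
    order = list(activities)
    while True:
        rng.shuffle(order)
        # Accept only if every position differs from the printed list.
        if all(played != listed for played, listed in zip(order, activities)):
            return order


if __name__ == "__main__":
    listed = [f"activity {i}" for i in range(1, 7)]  # placeholders for the six activities
    print(play_order(listed, random.Random(1)))
```

For six items, roughly 37% of uniform shuffles are derangements, so rejection sampling needs only about three shuffles on average.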

Finally, participants took a quiz to measure how much they remembered. They were also asked to fill in a questionnaire about their impressions of the robot and any feedback:

- How many questions did you answer correctly? (Points from 0-6)

- You feel the robot can help you remember the task. (Agree, Neutral, Disagree)

- You feel the robot is annoying. (Agree, Neutral, Disagree)

- Based on the given system usability scale, please give our robot a score. (0-100)

In addition to the questions above, we also collected open feedback from participants:

- What did you like most about the robot?

- What did you dislike most about the robot?

- Do you have any further suggestions? (*optional)

Tasks

The participants are asked to memorize the associations between the given music and activities as well as they can while playing with the robot.

The robot plays a piece of music and asks the participant to name the associated activity.

At the end, the participant takes the final test and we count the number of correct answers.

Measures

We count the number of correct answers in the final test.
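For a fixed six-question test, the number of correct answers and the number of mistakes (procedure step 4) are complementary. A minimal scoring sketch, assuming answers are stored per music piece; `score_final_test`, the piece IDs and the activities are hypothetical.

```python
def score_final_test(answers, key):
    """Count correct answers and mistakes for one participant's final test.

    answers: dict mapping a music-piece ID to the activity the participant named.
    key: dict mapping each music-piece ID to its correct activity.
    Unanswered pieces count as mistakes; comparison ignores case and surrounding spaces.
    """
    def norm(text):
        return text.strip().lower()

    correct = sum(
        1 for piece, activity in key.items()
        if norm(answers.get(piece, "")) == norm(activity)
    )
    return correct, len(key) - correct


if __name__ == "__main__":
    key = {"m1": "cooking", "m2": "walking", "m3": "reading"}  # hypothetical pairs
    print(score_final_test({"m1": "Cooking", "m2": "reading"}, key))  # (1, 2)
```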

After the experiment, participants fill in the System Usability Scale and the questionnaire on mood and satisfaction.

Procedure

Event: Quiz

| No. | Group A (intelligent robot) | Group B (dumb robot) |
|---|---|---|
| 1 | Participants sign the consent form and read the instructions for the evaluation. | Participants sign the consent form and read the instructions for the evaluation. |
| 2 | Participants memorize six pieces of music, each associated with a different activity. | Participants memorize six pieces of music, each associated with a different activity. |
| 3 | Participants play the quiz with the intelligent robot for three minutes; the robot tells them the correct answer when they give a wrong one. | Participants play the quiz with the dumb robot for three minutes; the robot only says that the answer is wrong and does not give the correct one. |
| 4 | Test how well participants remember the music-activity pairs by counting the mistakes they make. | Test how well participants remember the music-activity pairs by counting the mistakes they make. |
| 5 | Participants fill in the questionnaire and give feedback. | Participants fill in the questionnaire and give feedback. |

Material

NAO robot preloaded with the music, consent forms, laptop

Results

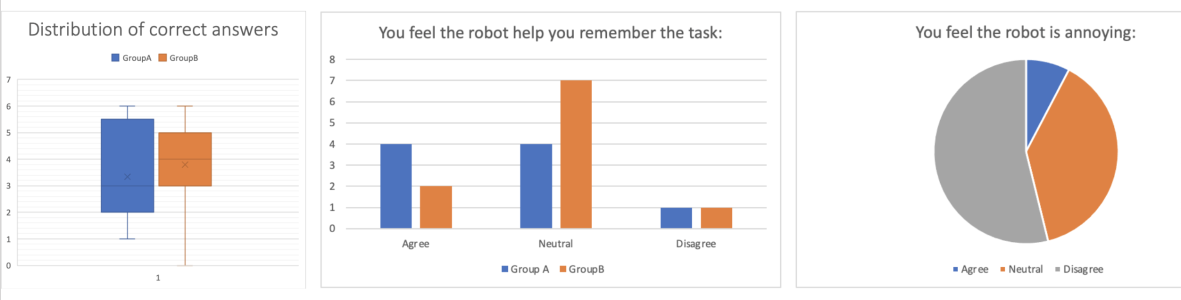

The left figure shows the distribution of the number of correct answers. The average score across all participants is 3.6 out of 6 questions: 3.3 for group A and 3.8 for group B. This unexpected difference may be explained by the small group sizes, which are not large enough to average out differences in individual memory ability. However, no participant in group A scored 0, whereas several participants in group B did, which suggests that everyone using the intelligent robot learned at least some of the pairs. To this extent, the results suggest that our robot may help with memory.
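As a consistency check, the overall average should equal the size-weighted mean of the two group averages, using the group sizes from the Participants section (9 in group A, 10 in group B). Because the reported group means are themselves rounded, the check is approximate; `pooled_mean` is a hypothetical helper name.

```python
def pooled_mean(groups):
    """Overall mean from (group size, group mean) pairs: sum(n_i * m_i) / sum(n_i)."""
    total = sum(n for n, _ in groups)
    return sum(n * m for n, m in groups) / total


if __name__ == "__main__":
    overall = pooled_mean([(9, 3.3), (10, 3.8)])  # group A, group B
    print(f"{overall:.2f}")  # 3.56, which rounds to the reported 3.6
```

The result, (9 × 3.3 + 10 × 3.8) / 19 ≈ 3.56, agrees with the reported overall average of 3.6.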

The middle figure shows that participants in group A tended to think our robot could help with the memory task, and the right figure shows that only a few of them found the robot annoying.

As shown in the figure above, group A (intelligent robot) gave our robot an average SUS score of 66.7, and group B (dumb robot) gave 58.2. On this scale, participants appear more willing to play with our intelligent robot.

We also collected feedback from the participants. Most of them liked the appearance of the robot, which is consistent with our reason for choosing the NAO: we expected people to be more engaged and more willing to interact with a humanoid robot. Some participants complained about the robot's speech recognition.

Discussion & Conclusion

We assumed that our intelligent robot would help people strengthen the association between music and activities. The average number of correct answers did not support this. There are several possible reasons. First, our participants were not real PwD, and their memory abilities varied. Second, our groups (about 10 participants each) were not large enough. Third, participants were given only a limited amount of time. The short duration of the quiz and the use of non-personalized music also contributed to this result. However, the difference in overall usability scores between the two groups, together with the questionnaire results above, suggests that our claims that PwD are more willing to play with our intelligent robot and that PwD are happy to use the robot may still hold.

In addition, our evaluation was limited by several key factors:

- Due to limited time and resources, we could not evaluate all the claims made in the use cases. This limits the scope of our conclusions about the effectiveness of the system.

- As mentioned before, the small sample size makes the accuracy of the results questionable. A larger and more diverse sample would allow us to predict real-world usage more accurately.

- The accuracy of the speech recognition system in the NAO and the availability of test subjects and robots also limited the evaluation.

Our evaluation suggests that participants using our intelligent robot were more willing to play the quiz and more likely to consider that the robot helped them remember the task than the control group. Our robot still needs further improvement, as discussed above. In the future, we could improve the following aspects:

- Test a full implementation of the system in a real setting with PwD.

- Research should also examine whether the robot is actually necessary, or whether the advantages of the system could be achieved with a cheaper alternative, such as a virtual robot on a tablet. (This was also prompted by feedback: one participant asked why we did not create an app.)

Reference

[1] Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the System Usability Scale. International Journal of Human–Computer Interaction, 24(6), 574-594.